The Secret to Getting Better Answers from Language Models

My mom is my best friend. She lives in India and works as a school teacher, but she always takes a keen interest in my blogs. Today, she asked me how my Agentic AI blog was coming along. I couldn’t help but laugh a little - it was so sweet. She genuinely listens to me! :p

I told her I’m starting by writing about Prompt Engineering because, in the world of Agentic AI, that’s like learning your ABCs. And then she goes, “What’s that?”

So, let me explain it the way I explained it to her.

What does Prompt Engineering mean?

I’m sure you’ve interacted with ChatGPT before. When you ask it questions, sometimes the response isn’t exactly what you wanted. So, you tweak or rephrase your question to get a better answer.

Prompt engineering is basically the art and science of crafting these questions (or prompts) so that tools like ChatGPT understand exactly what you want.

For example, if you ask ChatGPT,

Give me a 4-day itinerary for Florence

It’ll give you a plan. But it might be generic and not really tailored to your style. Now, if you say,

You are a tour agent. Help me plan a 4-day itinerary for me and my partner in Florence, Italy. We enjoy wine tasting and cooking experiences. We don’t want to visit too many museums but want to experience authentic Tuscan culture and food. Our budget is XYZ.

Then ChatGPT will likely deliver a much better, more personalised itinerary because you’ve given it a clear role and detailed info about who’s travelling, what you want, and what your budget is.

My mom was very happy with this explanation, but also quite surprised when I told her that Prompt Engineering is actually a real job these days.

Writing prompts might sound straightforward, but there’s actually a surprising amount of skill and technique behind it.

So, let’s get a bit technical now and learn how to write an effective prompt. We’ll also explore some popular prompting techniques that can help you get the best out of AI tools like ChatGPT.

We will cover:

- Five Steps to Design an Effective Prompt

- Popular Prompting Techniques

- Prompt Chaining

- Chain of Thought (CoT) Prompting

- Tree of Thought (ToT) Prompting

- Self Consistency

- ReAct: Reason + Act

- Directional Stimulus Prompting

Five Steps to Design an Effective Prompt

To write an effective prompt, you should focus on the five elements listed below:

- Task: Clearly state what you want the LLM to do (Create a 4-day itinerary for Florence, Italy)

- Context: Provide all relevant background information to help the AI understand your needs (Mention who’s travelling, what activities you enjoy, any preferences or restrictions)

- References: Give examples or point to specific places or experiences you want included. (Include a visit to a Tuscan vineyard, recommendations for local trattorias)

- Evaluate: After receiving the AI’s response, assess whether it meets your expectations. (Does the itinerary balance activities and relaxation as you wanted?)

- Iterate: If the response isn’t quite right, refine your prompt and try again. (You might add more detail, like asking for less crowded spots or local gelaterias)

One handy way to remember this is by using a mnemonic, something simple that sticks in your head.

Thoughtfully Craft Really Excellent Inputs
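To make the five elements concrete, here is a minimal Python sketch that reuses the Florence example. The names `PromptSpec`, `build_prompt` and `iterate` are my own illustrations, not part of any library. The first three elements go into the prompt text, while Evaluate and Iterate happen after you read the answer.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the elements you write up front."""
    task: str
    context: str = ""
    references: list[str] = field(default_factory=list)


def build_prompt(spec: PromptSpec) -> str:
    """Assemble the prompt text from whichever elements are filled in."""
    parts = [f"Task: {spec.task}"]
    if spec.context:
        parts.append(f"Context: {spec.context}")
    if spec.references:
        parts.append("References: " + "; ".join(spec.references))
    return "\n".join(parts)


def iterate(spec: PromptSpec, extra_context: str) -> PromptSpec:
    """Iterate step: return a refined copy with one more detail added."""
    merged = f"{spec.context} {extra_context}".strip()
    return PromptSpec(spec.task, merged, list(spec.references))


spec = PromptSpec(
    task="Create a 4-day itinerary for Florence, Italy.",
    context="Two travellers who enjoy wine tasting and cooking experiences.",
    references=["a visit to a Tuscan vineyard", "local trattorias"],
)
print(build_prompt(spec))

# Evaluate the answer yourself, then refine the prompt and try again.
refined = iterate(spec, "We prefer less crowded spots.")
print(build_prompt(refined))
```

Keeping the elements separate like this makes it easy to change just one of them between attempts and see what difference it makes.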

If you’re still not getting the right response, try these tricks to refine your prompt further:

- Break down your request into smaller, simpler sentences

- Try an analogous prompt

- Add constraints to narrow the focus (we will be staying near the Duomo in Florence)

Popular Prompting Techniques

Now that we’ve covered the basics of writing a good prompt, let’s dive deeper into some of the advanced techniques that can help you get even better, more accurate, and more nuanced responses from LLMs.

Prompt Chaining

Prompt chaining means breaking a big task into smaller prompts, then using the output from one step as the input for the next. This helps to make the problem-solving more structured and easier to debug.

Think about how a data analyst works: you first look at the data, then analyse it, and finally provide insights and suggestions.

Now you have to enable the LLM to do this for you. So instead of dumping your raw data into GPT and saying 'Analyse it,' use prompt chaining to guide it through the steps.

Prompt 1:

Here's a dataset of a company's performance over four years. Please describe what this dataset contains and what each column represents.

Prompt 2:

Based on this dataset, highlight any noticeable trends across the years.

Prompt 3:

Based on these trends, what business insight or recommendation would you suggest?

So you set the context, break down the patterns, and interpret the meaning. This is why prompt chaining is often a lot more effective, especially for complex tasks.
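If you were doing this in code rather than in a chat window, the chain could look roughly like the sketch below. It is only an illustration: `run_chain` is a name I made up, and `fake_llm` is a stand-in that you would replace with a real model call. The tiny CSV string is also invented for the demo.

```python
from typing import Callable

seen_prompts: list[str] = []  # lets us inspect what each step received


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call: echoes the instruction it was given."""
    seen_prompts.append(prompt)
    first_line = prompt.splitlines()[0]
    return f"[answer to: {first_line}]"


def run_chain(llm: Callable[[str], str], data: str, steps: list[str]) -> list[str]:
    """Run the prompts in order, feeding each answer into the next prompt."""
    outputs = []
    previous = data
    for step in steps:
        prompt = f"{step}\n\n{previous}"
        previous = llm(prompt)
        outputs.append(previous)
    return outputs


steps = [
    "Describe what this dataset contains and what each column represents.",
    "Based on this description, highlight any noticeable trends across the years.",
    "Based on these trends, what business insight or recommendation would you suggest?",
]
# Made-up sample data, only for the demo.
results = run_chain(fake_llm, "year,revenue\n2021,100\n2022,120", steps)
for result in results:
    print(result)
```

Because every intermediate answer is kept in `results`, you can see exactly which step went wrong if the final recommendation looks odd.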

Chain of Thought (CoT) Prompting

Sometimes, getting the right answer means walking through the steps. In Chain of Thought prompting, we ask the LLM to reason step by step and show that reasoning before it gives the final answer. This is especially useful for mathematical problems and other logical questions.

Here’s a real example from when I was feeling too lazy to do the math myself:

Prompt I gave:

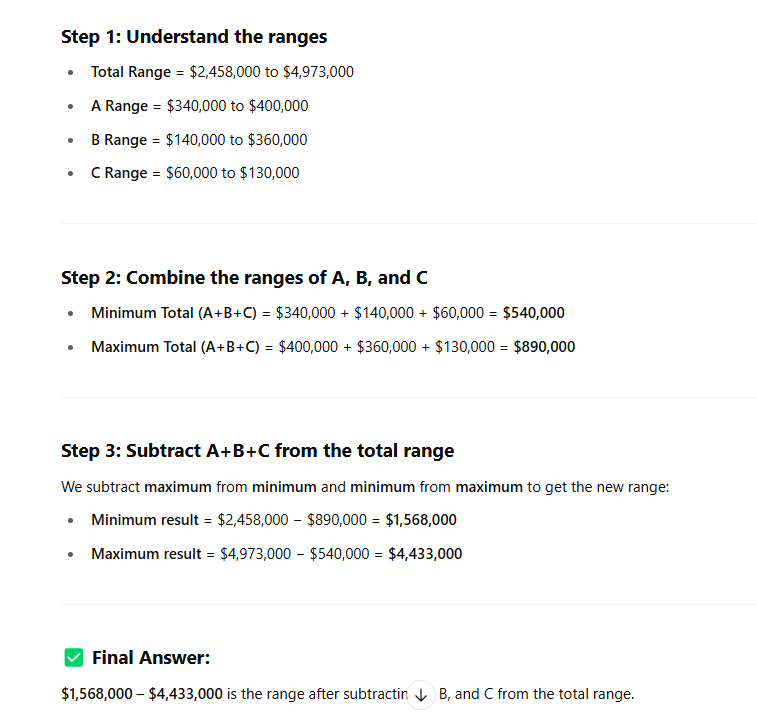

Subtract A ($340,000–$400,000), B ($140,000–$360,000), and C ($60,000 - $130,000) from $2,458,000–$4,973,000. Think step by step.

Answer I got:

If I hadn’t asked it to “think step by step,” I wouldn’t have noticed it made a mistake in the last calculation. I wanted it to subtract the minimum amounts from the lower limit total and the maximum amounts from the upper limit total, but it mixed this up.

This is why asking the AI to explain its thought process helps: it often improves accuracy, and it lets you check each step, so you can catch mistakes before trusting the answer.
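For reference, here is the calculation I had in mind, written out as a small Python sketch: the minimum amounts come off the lower limit and the maximum amounts come off the upper limit. The variable names are just for illustration.

```python
# Ranges from the prompt, as (low, high) pairs in dollars.
total = (2_458_000, 4_973_000)
deductions = {
    "A": (340_000, 400_000),
    "B": (140_000, 360_000),
    "C": (60_000, 130_000),
}

low, high = total
for name, (d_low, d_high) in deductions.items():
    low -= d_low    # minimum amounts come off the lower limit
    high -= d_high  # maximum amounts come off the upper limit
    print(f"after {name}: ${low:,} to ${high:,}")

print(f"result: ${low:,} to ${high:,}")
```

Done this way, the final range works out to $1,918,000 to $4,083,000, which is the number to compare against the model's last step.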

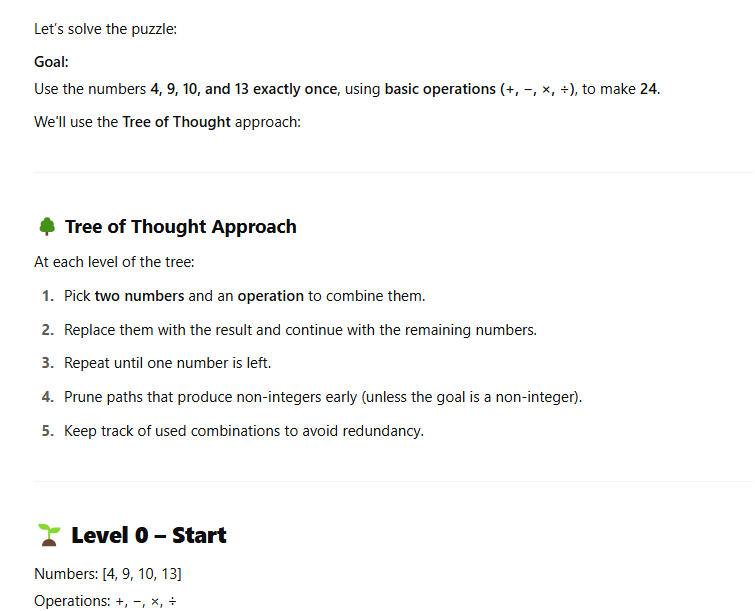

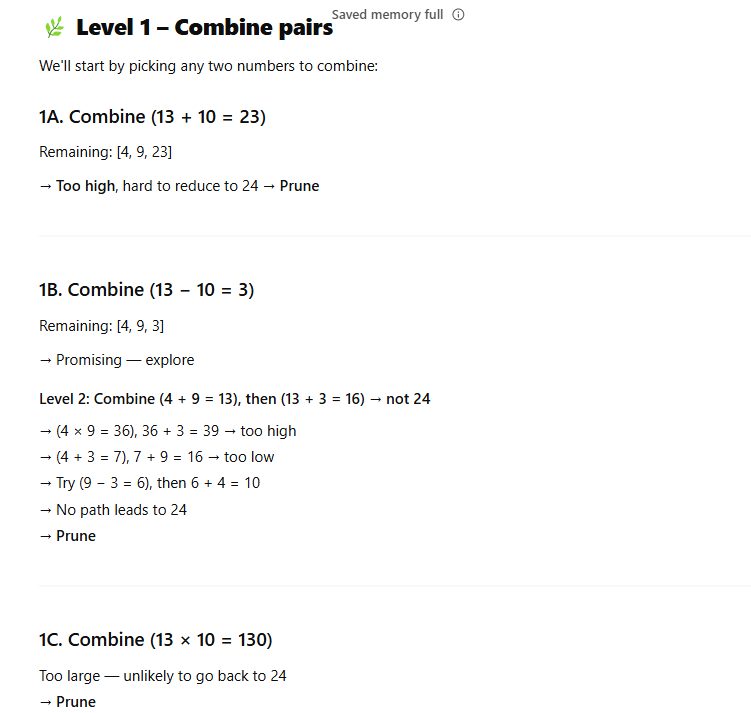

Tree of Thought (ToT) Prompting

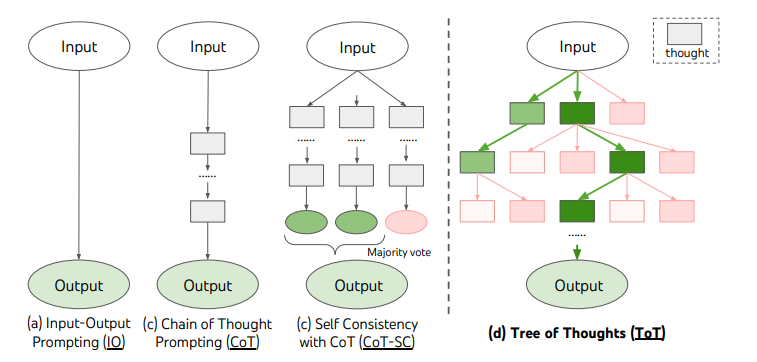

Tree of Thought prompting is a slightly more advanced technique than chain of thought. While CoT helps the LLM “think out loud” in a straight line, ToT lets the LLM generate multiple intermediate steps or solution paths (like branches of a tree), evaluate them, and prune those that aren’t promising. This approach helps the model tackle complex, multi-step problems more effectively.

But how does it know which path to pick? What's the evaluation criterion?

There are two main ways:

- Independent Scoring: The model gives each thought a score or probability, for example rating it from 1 to 10 on how likely it is to lead to a good solution.

- Voting/Comparative Selection: The model compares several thoughts side-by-side and picks the one that looks most promising. For example, it might ask, “Among these three options, which do you think is best, and why?”

Based on the evaluations, the model either:

- Explores multiple top-ranked thoughts (breadth-first algorithm), or

- Follows the most promising one deeply (depth-first algorithm), but can backtrack if the path fails.

By default, GPT tries to solve problems quickly and linearly unless directed otherwise. So if you want GPT to use the Tree of Thought approach, you need to ask for it explicitly.

Prompt:

Use a Tree of Thought approach to solve this: Use the numbers 4, 9, 10, and 13, and any basic operations (+, −, ×, ÷) to make 24. Each number must be used exactly once. Show your reasoning tree step by step, including possible branches and pruning.

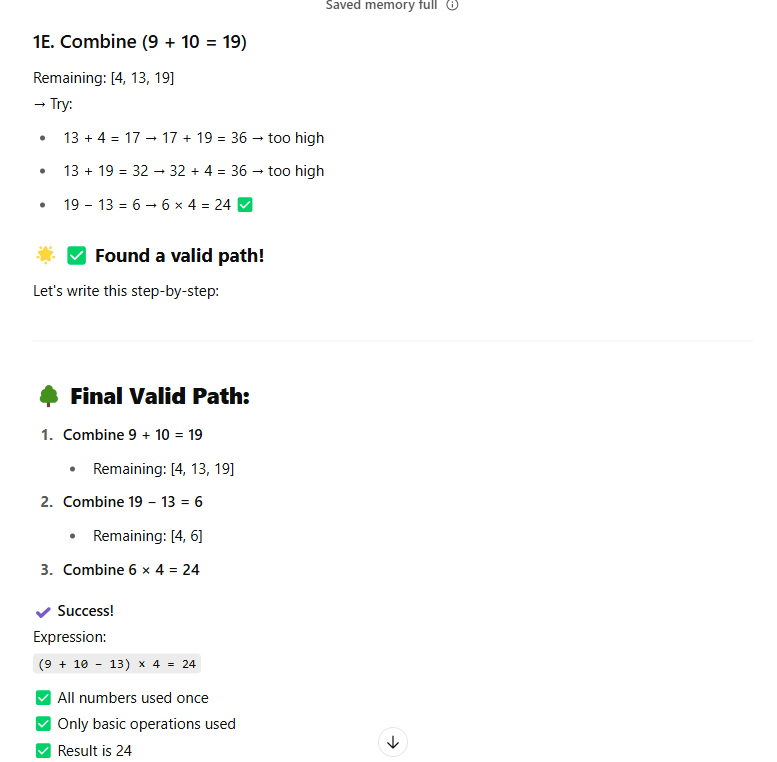

Answer I got:

Isn't it amazing? It explored different combinations branch by branch instead of committing to a single path. Imagine using this for scenario modelling at work. So powerful.
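To get a feel for what "branches, dead ends and backtracking" mean here, below is a small Python sketch that solves the same puzzle with a plain depth-first search. Please note the assumption: this is classical search code, not an LLM. In real Tree of Thought prompting the model itself proposes and scores the branches, whereas here every branch is simply tried in turn. The function names `solve_24` and `_search` are my own.

```python
from fractions import Fraction
from itertools import combinations


def solve_24(numbers, target=24):
    """Depth-first search; each state is the list of values still available."""
    start = [(Fraction(n), str(n)) for n in numbers]
    return _search(start, Fraction(target))


def _search(state, target):
    if len(state) == 1:
        value, expr = state[0]
        return expr if value == target else None  # leaf: solved or dead end
    for i, j in combinations(range(len(state)), 2):
        (a, ea), (b, eb) = state[i], state[j]
        rest = [s for k, s in enumerate(state) if k not in (i, j)]
        # Branches: every way of combining the chosen pair.
        candidates = [
            (a + b, f"({ea} + {eb})"),
            (a * b, f"({ea} * {eb})"),
            (a - b, f"({ea} - {eb})"),
            (b - a, f"({eb} - {ea})"),
        ]
        # Prune invalid branches: never divide by zero.
        if b != 0:
            candidates.append((a / b, f"({ea} / {eb})"))
        if a != 0:
            candidates.append((b / a, f"({eb} / {ea})"))
        for value, expr in candidates:
            found = _search(rest + [(value, expr)], target)
            if found is not None:
                return found  # first successful path wins
            # Otherwise backtrack and try the next branch.
    return None


solution = solve_24([4, 9, 10, 13])
print(solution)
```

One valid answer for these numbers is (10 − 4) × (13 − 9) = 24, although the search may print a different but equally valid expression. Each number is used exactly once because a pair is removed from the state every time it is combined.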

Self Consistency

Instead of relying on one response, you ask GPT the same question multiple times and then take the most common answer. The LLM may give slightly different answers each time (especially when reasoning), so this technique reduces the chance of errors caused by one-off randomness. It can also help uncover better reasoning paths that the first response might miss.

This approach is especially powerful when combined with CoT: ask the model to show its steps each time, then pick the final answer that appears most often. Agreement across several independent runs gives you more confidence in the final output.
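The voting part is simple enough to sketch in a few lines of Python. This is only an illustration under some assumptions: `fake_model` returns canned answers so the example is repeatable, and in practice you would call a real model several times and pull the final answer out of each step-by-step response before counting.

```python
from collections import Counter
from itertools import cycle

# Canned final answers standing in for five separate model runs.
canned = cycle(["24", "18", "24", "24", "26"])


def fake_model(question: str) -> str:
    """Hypothetical stand-in for one model run; returns a final answer only."""
    return next(canned)


def self_consistent_answer(ask, question, samples=5):
    """Ask the same question several times and keep the most common answer."""
    answers = [ask(question) for _ in range(samples)]
    winner, votes = Counter(answers).most_common(1)[0]
    return winner, votes / samples


answer, agreement = self_consistent_answer(fake_model, "Make 24 from 4, 9, 10, 13")
print(answer, agreement)
```

The agreement ratio is a handy extra signal: if only a small share of the runs agree, that is a hint to look at the reasoning more closely rather than trust the winner.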

Below is a visualisation to illustrate the difference between CoT, ToT and Self Consistency.

ReAct: Reason + Act

Another powerful prompting technique is ReAct, which stands for Reason + Act. While Chain of Thought (CoT) focuses on guiding the model to think step-by-step before producing a final answer, ReAct goes a step further by enabling the model to alternate between reasoning and taking actions. These actions can include things like searching for information on the web, performing a calculation, querying a database, or calling a function. This is especially useful when the LLM lacks all the necessary information upfront.

Example:

CoT Prompt:

Who is the current CEO of OpenAI? Think step by step.

Response (Chain of Thought style):

I know that OpenAI is a research lab in AI. I recall that Sam Altman has been its CEO for a long time. So the current CEO of OpenAI is likely Sam Altman.

But what if the model isn’t sure, or it’s out of date? It will guess.

ReAct Prompt (structured like an AI agent):

Question: Who is the current CEO of OpenAI?

Thought: I need to look this up online to be sure.

Action: Search["Current CEO of OpenAI"]

Observation: Sam Altman is listed as CEO in recent articles.

Final Answer: The current CEO of OpenAI is Sam Altman.

The model doesn’t just reason, it acts (like searching the web) and then uses the result to answer accurately.
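Behind the scenes, something has to run that Thought, Action, Observation loop. Here is a minimal Python sketch of the idea. Everything in it is an assumption for illustration: `scripted_model` is a hard-coded stand-in for an LLM, `fake_search` returns a fixed string instead of searching the web, and the `Search["..."]` format is simply the one used in the example above.

```python
import re

# Matches lines such as: Action: Search["Current CEO of OpenAI"]
ACTION_RE = re.compile(r'Action:\s*(\w+)\["(.+?)"\]')


def react_loop(model, tools, question, max_steps=5):
    """Alternate between model steps and tool calls until a final answer."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = model(transcript)
        transcript += step + "\n"
        if "Final Answer:" in step:
            return step.split("Final Answer:", 1)[1].strip(), transcript
        match = ACTION_RE.search(step)
        if match and match.group(1) in tools:
            name, arg = match.groups()
            observation = tools[name](arg)  # act, then record what came back
            transcript += f"Observation: {observation}\n"
    return None, transcript  # gave up after max_steps


def scripted_model(transcript: str) -> str:
    """Hypothetical stand-in: acts first, answers once an observation exists."""
    if "Observation:" not in transcript:
        return ('Thought: I need to look this up online to be sure.\n'
                'Action: Search["Current CEO of OpenAI"]')
    return "Final Answer: The current CEO of OpenAI is Sam Altman."


def fake_search(query: str) -> str:
    """Hypothetical tool: returns a fixed result instead of a web search."""
    return "Sam Altman is listed as CEO in recent articles."


answer, transcript = react_loop(
    scripted_model, {"Search": fake_search}, "Who is the current CEO of OpenAI?"
)
print(transcript)
```

The `max_steps` limit is a design choice worth keeping in any real version, so the loop cannot run forever if the model never reaches a final answer.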

Directional Stimulus Prompting (DSP)

Another interesting and lesser-known prompting technique is Directional Stimulus Prompting.

It means providing the model with specific hints or keywords that guide the response more precisely, improving relevance and quality compared to a generic prompt.

For example, let's say you want to summarise this report:

"The quarterly sales report shows a 15% increase in revenue for the Northeast region, driven largely by a successful product launch in January. Customer feedback highlighted strong satisfaction with the new features. However, supply chain delays impacted the Midwest region, causing a 5% decline in sales. The company expects to resolve these issues by the next quarter."

Standard Prompt:

Summarize the above report in 2-3 sentences.

DSP Prompt:

Summarize the above report in 2-3 sentences based on the hint.

Hint: Northeast region; 15% revenue increase; product launch in January; Midwest region; 5% sales decline; supply chain delays.

The second prompt would produce a response that addresses the exact points you are interested in. This technique reduces noise or irrelevant content in the output and helps get consistent and targeted summaries or analyses.
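If you build such prompts often, a tiny helper keeps the hint format consistent. The sketch below is illustrative only: `build_dsp_prompt` is a name I invented, the report text is shortened to one sentence, and the hints are written by hand here.

```python
def build_dsp_prompt(report: str, instruction: str, hints: list[str]) -> str:
    """Attach a hint line of keywords to an otherwise standard prompt."""
    hint_line = "; ".join(hints)
    return (
        f"{report}\n\n"
        f"{instruction} Base the summary on the hint.\n\n"
        f"Hint: {hint_line}."
    )


# Shortened version of the report above, for the demo.
report = "The quarterly sales report shows a 15% increase in revenue for the Northeast region."
hints = [
    "Northeast region",
    "15% revenue increase",
    "product launch in January",
    "Midwest region",
    "5% sales decline",
    "supply chain delays",
]
prompt = build_dsp_prompt(report, "Summarize the above report in 2-3 sentences.", hints)
print(prompt)
```

Changing the list of hints is then all it takes to steer the summary towards a different part of the report.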

Closing

Thank you for reading this blog till the end. I hope you learnt something new and have a clearer understanding of the fundamentals and techniques of writing better prompts. Next time you interact with a language model, whether it’s for writing, analysis, coding, or creativity, think about which technique would work best. That small step can make a big difference in the quality of your results.

References:

- IBM. What is Chain of Thought (CoT) prompting?

- Prompt Engineering Guide. A Comprehensive Overview of Prompt Engineering – Nextra

- IBM. 4 Methods of Prompt Engineering – YouTube

- Yao, S., Yu, D., Zhao, J., Shafran, I., Griffiths, T. L., Cao, Y., & Narasimhan, K. (2023). Tree of Thoughts: Deliberate Problem Solving with Large Language Models

- OpenAI Documentation. Prompt Engineering – Text Generation Guide